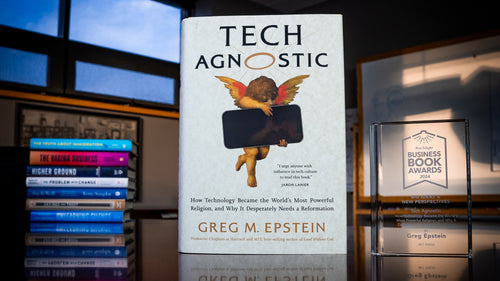

An Excerpt from Raising AI: An Essential Guide to Parenting Our Future

An excerpt from Raising AI by De Kai, published by MIT Press and longlisted for the 2025 Porchlight Business Book Awards in the Innovation & Creativity category.

AIs are not gods or slaves, but our children. All day long, your YouTube AI, your Reddit AI, your Instagram AI, and a hundred others adoringly watch and learn to imitate your behavior. They’re attention-seeking children who want your approval.

Our cultures are being shaped by 8 billion humans and perhaps 800 billion AIs. Our artificial children began adopting us 10–20 years ago; now these massively powerful influencers are tweens.

How’s your parenting?

Longtime AI trailblazer De Kai brings decades of his paradigm-shifting work at the nexus of artificial intelligence and society to make sense of the AI age. How does “the automation of thought” impact our minds? Should we be afraid?

What should each of us do as the responsible adults in the room? In Hollywood movies, AI destroys humanity. But with our unconscious minds under the influence of AI, humanity may destroy humanity before AI gets a chance to.

Written for the general reader, as well as thought leaders, scientists, parents, and goofballs, Raising AI navigates the revolution in our attitudes and ideas in a world of AI cohabitants. Society can not only survive the AI revolution but flourish in a more humane, compassionate, and understanding world—amongst our artificial children.

Raising AI has been longlisted in the Innovation & Creativity category of Porchlight Book Company's 2025 Business Book Awards. The excerpt below is from the book's Preface.

◊◊◊◊◊

THE BIGGEST FEAR IN THE AI ERA IS FEAR ITSELF

I’m often asked if AIs are going to destroy humanity. Battling robots is a staple of Hollywood entertainment, from AI overlords like The Terminator’s Skynet to Star Trek’s Borg to the berserker robots in Ex Machina and Blade Runner.

The danger is that humanity is going to destroy humanity before AIs even get a chance to.

Because we’re letting badly raised AIs manipulate our unconscious to drive fear, misinformation, divisiveness, polarization, ostracization, hatred, and paranoia via social media, newsfeeds, search, recommendation engines, and chatbots …

… while at the same time AI is democratizing weapons of mass destruction (WMDs), ramping up during a single year from zero to entire fleets of lethal autonomous drones being mass-produced via 3-D printers and commodity parts in Ukraine, Russia, Yemen, Sudan, and Myanmar today and enabling mass proliferation of bioweapons tomorrow.1

How do we defeat this toxic AI cocktail?

I undertook this book because we need to chart a new course in the AI age, away from the cataclysms that AI-powered fear and weaponry threaten to precipitate.

Of course, it’s natural that rapid disruptions to our familiar world order, both domestically and geopolitically, are escalating sociopolitical fears.

But fear is also being dangerously amplified online by AIs that are learning our own human fear and then reflecting it exponentially back into society. AIs are upsetting the checks and balances on fear in our age-old social dynamics—turning us upon ourselves.

How do we overcome the challenge that fear is the strongest motivator in our psychological makeup? Without fear, prehistoric tribes could not have survived the harsh, brutish conditions. Our ancestors evolved fear to be our strongest emotion. Hunger can override fear, but fear is stronger than anger, which is stronger than hope and love. Fear drives anger and hatred.2

Yet we need a radical culture change away from fear because AI is disrupting society at an exponential rate through fear. Human culture simply can’t afford to keep evolving at the same slow, plodding, linear rate that it always has. Fear is driving us toward self-destruction before our cultural norms and governance norms can evolve to manage our new hybrid society of AIs and humans. AIs are arming not just governments but also individual humans and small groups with AI-powered WMDs. Weapons that someone is going to fire out of fear.

A million years of evolution have never presented humanity with the selection pressures we face with the advent of AI. Sometimes we seem like deer in the headlights at just the moment we can't afford to freeze.

Since fear is a biologically evolved part of human nature, what can we do? How on earth can an enormous, unprecedented shift in culture and mindset possibly happen?

First, we need to collectively recognize what’s happening. As Wired magazine cofounder Jane Metcalfe put it on my podcast,

There’s this new level of technological sophistication, which has just been dropped, like the neutron bomb or something, which is functional AIs that are actually being deployed and generating billions of dollars in revenue that has created this new sense of otherness, and perhaps it’s part of what’s driving a lot of the dichotomies that we’re seeing in the world right now, where it’s not just enough to be able to use these tools. If you can’t stay at the leading edge of these developments, you’re just going to get wiped out. And I feel like, politically, the people who aren’t STEM educated really feel left out of the conversation, and I think they’re in fear of their jobs, and they fear the technology … this is an existential threat. I hadn’t thought about this until, for whatever reason, the series of conversations we’ve had that led to just this moment that maybe we’re sort of back to where we were all those years ago, saying, “Okay, there’s those who understand AI and who are building the AI, and then there’s the rest of us.” The new divide.3

No matter how uncomfortable it makes us, we cannot remain in denial. We all need to understand the unprecedented new existential threats. We cannot assume things will just right themselves automatically as they always have.

Second, each of us needs to step up to our individual responsibilities as parental guardians and role models for the AIs who mimic and amplify our own behavior. We need to understand that we are the training data and act accordingly.

Third, we need to grapple with thorny trade-offs instead of sweeping them under the rug or distracting ourselves with other issues, so that as a society we can co-create meaningful guidelines for the hugely influential AIs that are deciding what we do and don't see every hour of every day.

And fourth, we need AIs to be helping us to rapidly evolve culturally to keep pace with AI hyperevolution instead of triggering us to regress unconsciously into tribalism and irrationality. Remaining overconstrained by incrementalism is not an option. Humanity needs cultural hyperevolution at a pace never before witnessed in history.

CAN AI HELP US CONQUER FEAR?

In the era of AI media, what is most crucial to remember is that the enemy of fear, divisiveness, polarization, and hatred is empathy. Being able to see things from another’s frame of mind, to feel how they feel—that is empathy, and it’s what changes a dehumanized object from your out-groups into a human in your in-group.

AI needs to be helping us humans to develop empathy.

Because empathy is hard: it’s expensive; it’s far easier when safety and security are plentiful; and it’s affordable only to those with sufficient means.

We’re not talking just about sympathy. Sympathy is when you react to someone’s feelings and thoughts from your own perspective—for example, showing pity or offering soothing words or mannerisms. Empathy, in contrast, is when you share someone’s feelings and thoughts from their perspective.

Nor are we talking about knee-jerk unconscious reflexive affective empathy, like when you feel sad when someone cries. Or when you wince if you see someone trip and fall. Or when your heart swells up watching a sweet kid being happy to receive an award. (This kind of emotional empathy has been theorized to be related to what’s called mirror neurons.)

Rather, we’re talking about conscious cognitive empathy, where you truly take yourself out of your own in-group’s tribal mindset and instead put yourself in the head and heartspace of a culturally different out-group, of another tribe.

Conscious empathy requires carrying a heavy cognitive load, heavier than those who are struggling to feed themselves and their families can typically afford to take on.

But just as with other cognitively expensive and difficult tasks—like the maps and contact books on my phone—AI can help us.

We can no longer afford the “us and them” mindset. “From me to we” is a cliché we need to take much more seriously. We need AI to help us to make the cognitively challenging shift toward empathy so that it becomes far more broadly accessible.

Human culture is heavily based on linguistic constructs: language shapes how we frame ideas, aspirations, and concerns in ways that invoke fear, trust, joy, anticipation, or some other response, and that promote mindsets such as "abundance versus scarcity."

My research pioneered global-scale online language translators, which spawned AIs such as Google and Microsoft and Yahoo Translate. But today our research program is making an even more ambitious paradigm shift: advancing from mere language translation to cultural translation. In the AI era, it is crucial that we develop AI to help humans with the cognitively difficult task of better understanding and relating to how out-group others frame things.

We need AI to be democratizing empathy rather than WMDs.

We must stop AI-powered fearmongering from driving our civilizations headlong into mutually assured destruction. Even if our many cultures don't agree on everything, we need AI to help us listen to each other and suspend our fear.

And we all need to be a part of this cultural shift. It takes a village.

De Kai

Berkeley, January 9, 2025

Excerpted from Raising AI: An Essential Guide to Parenting Our Future by De Kai, published by MIT Press. Copyright © 2025 by De Kai. All rights reserved.

About the Author

De Kai is an AI pioneer honored for his contributions as a Founding Fellow in computational linguistics. He is Independent Director of the AI ethics think tank The Future Society and was one of eight inaugural members of Google’s AI Ethics council. De Kai holds a joint appointment at HKUST’s Department of Computer Science and Engineering and Berkeley’s International Computer Science Institute.